Last year, in a talk at Codemotion Berlin (see here), I described the poor model match between runtime and design time as one of the hurdles to keeping development productivity up when systems grow. Turns out that was an awfully abstract way of saying “well, something like that”.

At last I am a little smarter now, and I’d rather say it’s about integratedness.

This post is about:

What makes us slow down when systems grow large, and what to do about it?

A lot of things happen when systems grow, and there is more work on this topic around than I could possibly know about. What I will concentrate on here is one kind of accidental complexity that is bought into at an early stage, then neglected, and eventually accepted as a fact of life that would be too expensive to fix: the complexity of updates as part of (generic) development turnarounds.

While all projects start small and so any potential handling problem is small as well, all but the most ignorable projects eventually grow into large systems, if they survive long enough.

In most cases, for a variety of reasons, this means that systems grow into many modules, often into a distributed setup, and most definitely into a multi-team setup with split responsibilities and, not so rarely, with different approaches to deployment, operations, testing, etc.

That means: To make sure a change is successfully implemented across systems and organizational boundaries a lot of obstacles – requiring a diverse set of skills – have to be overcome:

Locally, it has to be made sure that all deployables that might have been affected are updated and installed. If there is a change in environment configuration, this has to be documented so it can be communicated. Does the change imply a change in operational procedures? Are testing procedures affected? Was there a change in the build configuration? And so on.

Now suppose that for an arbitrary change (assuming complete open-mindedness and only the desire for better structure) there are n such steps that may require human intervention, or else the update will fail. Furthermore, assume that each of these steps fails with a probability of at least p, independently of the others. Then the probability that an update succeeds is at most:

(1-p)^n

What we get here is a geometric distribution on the number of attempts required for a successful update. That means the expected number of attempts for any such update is at least:

1/(1-p)^n

which says nothing else but that

Update efforts grow exponentially with the number of obstacles.
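To get a feeling for the numbers, here is a minimal Python sketch of the model above. It assumes that the n steps fail independently and all with the same probability p; the function names and the value p = 0.05 are made up purely for illustration.

```python
def success_probability(p: float, n: int) -> float:
    """Probability that all n steps succeed when each one fails with probability p.

    Assumes the steps fail independently of each other.
    """
    return (1.0 - p) ** n


def expected_attempts(p: float, n: int) -> float:
    """Mean of the geometric distribution with success probability (1-p)^n."""
    return 1.0 / success_probability(p, n)


if __name__ == "__main__":
    p = 0.05  # hypothetical per-step failure probability, chosen for illustration
    for n in (1, 5, 10, 20, 40):
        print(
            f"n={n:2d}  "
            f"P(success)={success_probability(p, n):.3f}  "
            f"expected attempts={expected_attempts(p, n):.2f}"
        )
```

Under these assumptions, doubling the number of steps squares the expected number of attempts, which is the exponential growth stated above.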

While the model may be over-simplified, it illustrates an important point: Adding complexity to the process will kill you. In order to beat an increasing n, you would have to improve (1-p) exponentially, which is … well … unlikely.
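To make "unlikely" a bit more tangible, the model can be turned around: if we want the expected number of attempts to stay within some budget A, we need (1-p)^n ≥ 1/A, that is p ≤ 1 - A^(-1/n). The following sketch computes that bound. It is only an illustration under the same simplifying assumptions (independent steps, equal p); the function name and the budget of two attempts are hypothetical.

```python
def max_failure_probability(n: int, attempts_budget: float) -> float:
    """Largest per-step failure probability p such that 1/(1-p)^n <= attempts_budget.

    Derived from (1-p)^n >= 1/attempts_budget, assuming independent steps.
    """
    if n < 1 or attempts_budget < 1.0:
        raise ValueError("need n >= 1 and attempts_budget >= 1")
    return 1.0 - attempts_budget ** (-1.0 / n)


if __name__ == "__main__":
    budget = 2.0  # hypothetical: on average at most two attempts per update
    for n in (1, 5, 10, 20, 40):
        print(f"n={n:2d}  tolerable p per step <= {max_failure_probability(n, budget):.4f}")
```

The tolerable failure rate per step shrinks with every step that is added, so each individual step has to become more reliable just to keep the overall effort where it was.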

There is however another factor that adds on top of this:

In reality it all works out differently, and typically as a sort of death spiral: When stuff gets harder because procedures get more cumbersome (i.e. n grows), the natural tendency is not to fix the procedure (which may not even be within your reach) but to be less open-minded about changes. You avoid the risk of failing update steps altogether by restricting your work to some well-understood area that has little interference with others. First symptoms are:

- Re-implementation (copy & paste) across modules to avoid interference

- De-coupled development installations that stop getting updates for fear of interruption

Both of these inevitably happen sooner or later. The trick is to go for later and to make sure the boundaries can be removed again (which is why de-coupling of development systems in particular can make sense, if it is cheap). Advanced symptoms are:

- Remote-isolation of subsystems for fear of interference

That is hard to revert and increases n. While it may yield some short-term relief, it almost certainly establishes an architecture that is hard to maintain and makes cross-cutting concerns harder to monitor.

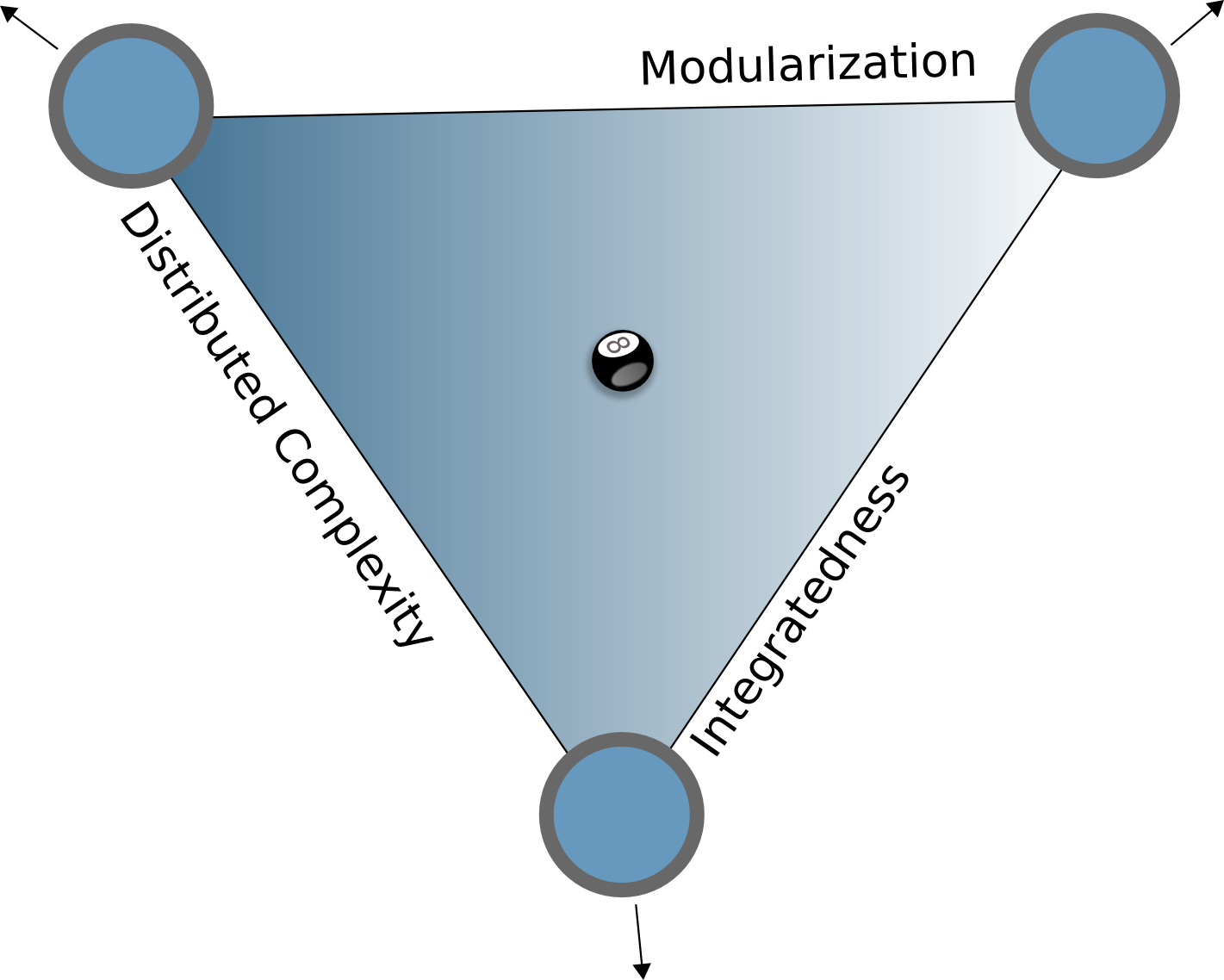

With integratedness of the system development environment, I am referring to small n’s and small p’s. I don’t have a better definition yet, but its role can be nicely illustrated in relation to two other forces that come into play with system growth: the system’s complexity and its modularity. As the system grows, so (normally) does its overall complexity. To keep that complexity under control, we apply modularization. To keep the cost of handling under control, we need integratedness.

One classic example of an integrated (in the sense above) development and execution environment is SAP’s ABAP in its traditional ERP use case. While ABAP systems are huge to start with (check out the “Beispiele” section in here), customers are able to add impressively large extensions (see here).

The key for ABAP here is: Stuff you don’t touch doesn’t hurt you, and implementing a change makes it available right away (n=1 for dev).

References

- Lines_of_Code (Beispiele), German Wikipedia

- how many lines of custom ABAP code are inside your system?, SAP SCN

- System-Centric Development